This page contains an annotated list of my publications/explanations about the 2023 surge of attention (hype is not always a wrong word here) society paid to Artificial Intelligence, mostly because of the impact of the public release of ChatGPT in the fall of 2022 by OpenAI.

Why did I create these? (Skip if you like)

On 10 October 2023, I gave a 40 minute presentation explaining Large Language Models (LLMs) to the Enterprise Architecture and Business Process Management Conference Europe 2023 in London. The goal was to make what really happens in these models — without unnecessary technical details — clear to the audience, so they would be able to advise about them. After all, many people want to know what to think of all the reporting, and they look at their ‘trusted IT advisors’ for advise.

I had a bit of a head start for such a deep dive, as I had worked for a university computer coaches institute in the late 1980’s and a language technology company in the early 1990’s, a company that came out of one of the best run (but still unsuccessful) research projects on automated translation in the 1980’s. That company had learned valuable lessons from that unsuccessful attempt, and was one of the first companies that pioneered the use of statistics (in our case word statistics) in language technology. We built a successful indexing system for a national newspaper at a fraction of the maintenance and development cost of existing expert-system-like systems such as that (LISP-based) one from CMU that was used by Reuters. From that moment on, I’ve been keeping tabs on AI development, which meant that I could move quickly.

Creating the talk was still a lot of ‘work’, as I had to consume a lot of information from scientific articles, white papers, YouTube videos (I watched the entire 2023 version of the MIT lectures, for instance), to make sure I would understand everything a few levels deeper than the level I would talk at, and that my insight was up to date and correct. So, I do understand what multi-head attention in a transformer is, I know how those vector and matrix calculations and activation functions work, what the importance of a KV-cache is, etc., even if none of that shows up in my presentation or writings. These technical details aren’t necessary for people to understand what is going on, they are necessary for me so that I know what I tell people probably isn’t nonsense.

In fact, I quickly found out the information available on Generative AI was generally not helpful at all for understanding by the general public. There were many of those deep technical explanations (like those MIT lectures) which were completely useless for a management level. There were many pure-hype stories not based on any real understanding, but that were full of all sorts of (imagined or impossible) uses and extrapolations. Even scientific papers were full of weaknesses (e.g. conclusions based on not applying statistics correctly). There were critical voices about the hype and sloppiness, but these were a very small minority. Basically, I did not find anything that did what I could copy/use, so I created my own (since then, I have come across some others I consider ok, but not an overview like this).

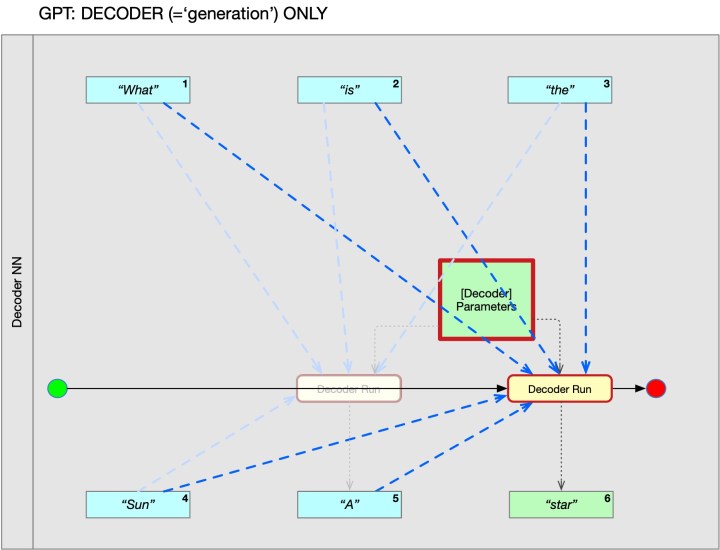

I noticed there were a few very essential characteristics of these systems that are generally ignored or simplified. These omissions/simplifications have a very misleading effect. So I decided I should specifically address them. One is that people experience that they are in a question/answer game with ChatGPT, but that is actually false, it only looks that way to us. The other is that people think that LLMs work on ‘words’ but this is a simplification (what Terry Pratchett has called “A Lie To Children”) that hides an essential insight. Another one wasn’t part of the talk, the misleading use of in-context learning (explained in one of the articles below).

The feedback on these publications so far has been very positive (people really think it helps to get a much better understanding) but they are not (yet?) spread far and wide (the talk has seen about 3000 views by end of 2023). You’ll find the entire talk (YouTube) at the end of this overview page. The articles will often expand on the talk, the talk is probably easier to be taken along the steps of basic understanding (but the articles will work as well).

Of course, as most authors/speakers, I like to entertain. A primary goal of all my public activities is therefore always to entertain you. It must be fun to read. And, it turns out, simplifying your message to the level of four-year olds (as you are advised to do often when talking about technical stuff) is bad. Especially if you’re the ‘graveyard shift’ after lunch. The reverse is true: challenge your audience, and they are entertained. “I did not understand any of it,” someone told me in the 1990’s when I had given an (AI) talk at a university somewhere, “but is was mighty fun”. That’s not good either, but preferable to ‘boring’.

So, enjoy.

Here we go:

Before digging into the technical ‘details’, it might be enlightening to dig into the conviction held by many in the AI world: that what is happening now is a step on the road to ‘machines as intelligent as humans’ (AGI). It is why OpenAI (the company behind ChatGPT) was founded: to create AGI that is ‘safe’ and ‘beneficial’ for humanity. When you dig into the history, there are a couple of funny surprises. History doesn’t repeat itself, but boy does it rhyme.

Artificial General Intelligence is Nigh! Rejoice! Be very afraid!

Should we be hopeful or scared about imminent machines that are as intelligent or more than humans? Surprisingly, this debate is even older than computers, and from the mathematician Ada Lovelace comes an interesting observation that is as valid now as it was when she made it in 1842.

Keep readingErik J. Larson, the author of The Myth of AI, asked me to write a guest post on his Substack, explaining those two main and key misleading omissions/simplifications from my talk that are in almost every other explanation out there. and comment on the OpenAI boardroom drama.

Gerben Wierda on ChatGPT, Altman, and the Future

Hi All,

The world is atwitter right now with the conflict between Sam Altman and the board of OpenAI. Gary Marcus is posting about it almost daily, and the ink getting spilled over it is considerable, and for good reason. On Colligo, we too are “following the story.” It’s my honor to publish a wonderful guest post from Gerben Wierda, who can put the Altman shocker in a broader and helpful context.

Most people are aware that ChatGPT and Friends make the ‘occasional error’. This post explains how these errors come from the essential mechanism of these systems and that — as Sam Altman, OpenAI’s CEO has said (but who listens to him when he says true things like that) — these aren’t ‘errors’ at all and talking about them as ‘errors’ actually misleads us.

The hidden meaning of the errors of ChatGPT (and friends)

We should stop labelling the wrong results of ChatGPT and friends (the ‘hallucinations’) as ‘errors’. Even Sam Altman — CEO of OpenAI — agrees, they are more ‘features’ than ‘bugs’ he has said. But why is that? And why should we not call them errors?

Keep readingGoogle’s Gemini has arrived and its marketing blitz contains some hidden gems, above all a good start for explaining the other key ‘overlooked’ element when discussing Large Language Models: the ‘learning’ that happens when a LLM uses the prompt. It turns out that many benchmarks are reported, not based on what the model can do on its own, but what it can do ‘with help’. Another gem is that Google’s information enables us to calculate the chance of GPT4 or Gemini producing a correct small program (instead of a correct code snippet). Lies, Big Lies, Statistics, Benchmarks, and more Shenaningans.

State of the Art Gemini, GPT and friends take a shot at learning

Google’s Gemini has arrived. Google has produced videos, a blog, a technical background paper, and more. According to Google: “Gemini surpasses state-of-the-art performance on a range of benchmarks including text and coding.”

But hidden in the grand words lies another generally overlooked aspect of Large Language Models which is important to understand.

And…

Keep readingTraditionally, AI has been separated into ‘Narrow’ and ‘General’ AI, the latter standing for true intelligence: AGI (Artificial General Intelligence). AGI stands for systems being flexibly intelligent in the way humans are, so for instance being able to master a new skill.

OpenAI’s Sam Altman has claimed (Jan 2025) “we know how to build AGI”. OpenAI’s GPT-o3 has done remarkably well on a special version (ARC-AGI-PUB) of one of a test (ARC-AGI) that must be mastered for a system to be able to claim AGI.

GPT is not narrow, as in Chess programs and such (it actually often does worse than the best narrow AI systems), and it has good-enough results in a wide area of uses. ChatGPT and Friends have been claimed to have ‘sparks of AGI’ or be ’emerging AGI’ (e.g. by Google DeepMind), but it looks more that thanks to the current revolution in large statistical AI, we need a third category: ‘Wide’ AI. Not narrow, but not general, and what is more: it is missing the essential characteristics to grow into general as well.

A key element that could be missing — even for something like self-driving cars — is actualliy imagination.

Let’s call GPT and Friends: ‘Wide AI’ (and not ‘AGI’)

GPT-3o has done very well on the ARC-AGI-PUB benchmark. Sam Altman has also claimed OpenAI is confident that it can build Artificial General Intelligence (AGI). But that may be based on confusions around ‘learning’. On the difference between narrow, general and (introducing) ‘wide’ AI.

Keep readingNow that we have looked at the the two different modes of ‘learning’ of neural nets (basic — setting the model parameters from training data — and in-context-learning — learning from the prompt) we can read about a very informative research paper three Microsoft researchers published. In that paper, they unveil a new ‘jailbreaking’ framework. ‘Jailbreaking’ is trying to circumvent the fine-tuning of a model, especially the fine-tuning that is supposed to make it ‘safe’ to use (“harmless”), e.g. that prevents it from providing recipes for toxins or instructions for self-harm. The framework they unveil is called Crescendo, and it works by pitting one mode of ‘learning’ (in-context-learning) of neural nets against another (fine-tuning) in a very elegant way that will be hard to protect against.

Microsoft lays a limitation of ChatGPT and friends bare

Microsoft researchers published a very informative paper on their pretty smart way to let GenAI do ‘bad’ things (i.e. ‘jailbreaking’). They actually set two aspects of the fundamental operation of these models against each other.

Keep readingThe (final) important aspect of Generative AI to look at is memorisation, that is when Generative AI models such as GPT and Midjourney are able to correctly reproduce information. This is both sought-after — after all if you want the text of Shakespeare’s Sonnet 18, you want the real thing, not a confabulation. Reliability of what these models produce is often important. But that same sought-after quality is a quagmire of things you do not want: data leakage, loss of confidentiality, plagiarism. It turns out, we’re probably looking at a fundamental limit of Generative AI.

Memorisation: the deep problem of Midjourney, ChatGPT, and friends

If we ask GPT to get us “that poem that compares the loved one to a summer’s day” we want it to produce the actual Shakespeare Sonnet 18, not some confabulation. And it does. It has memorised this part of the training data. This is both sought-after and problematic and provides a fundamental limit for…

Keep readingBrian Merchant, author of the book Blood in the Machine (a book about the start of the Industrial Revolution), has made a very astute observation about Generative AI and its effect on jobs. That observation is as insightful as it is outside of most discussions: Generative AI doesn’t need to be good to be disruptive. Even if all the criticisms (like those shown in this series) are correct, GenAI can still have an effect. And that effect is the same as that of the first machines during the Industrial Revolution: the introduction of the catergory ‘cheap’ (both meanings) in certain areas, e.g. creative work like making illustrations. But with a twist: the early machines were independent weavers, pottery makers, etc.. But GenAI is parasiting on the skills of the original autors. GenAI doesn’t copy their works, true, but it — poorly — copies their skills. That is new.

The GenAI bubble is going to burts, but that doesn’t mean GenAI is going away. The dotcom bubble burst too, but the internet stayed. Will GenAI’s bust be like that?

Generative AI doesn’t copy art, it ‘clones’ the artisans — cheaply

The early machines at the beginning of the Industrial Revolution produced ‘cheap’ (in both meanings) products and it was the introduction of that ‘cheap’ category that was actually disruptive. In the same way, where ‘cheap’ is acceptable (and no: that isn’t coding), GenAI may disrupt today.

But there is a difference. Early machines were separate…

Keep readingGoogle Gemini (see above) improves on benchmark results compared to GPT4, but the way it does that is remarkable and has little to do with actually improving the LLM, but using it differently. ChatGPT has acquired a better way of doing arithmetic which produces less errors, but the way it does that is equally remarkable and has nothing to do with actually improving the LLM. There is an elephant in the room: What these improvements at the ‘chat’ level have in common is that they aren’t any improvements on LLMs themselves, but are attempts to engineer around the (fundamental) limitations of LLMs. LLMs, in other words, are plateauing, it seems.

The Department of “Engineering The Hell Out Of AI”

ChatGPT has acquired the functionality of recognising an arithmetic question and reacting to it with on-the-fly creating python code, executing it, and using it to generate the response. Gemini’s contains an interesting trick Google plays to improve benchmark results.

These (inspired) engineering tricks lead to an interesting conclusion about the state of LLMs.

Keep readingYet more ‘engineering the hell out of…’.

The second half of 2024 and the beginning of 2025 has seen the arrival of socalled ‘reasoning models’. Large Language Models specifically built and trained to be good at ‘reasoning’. And there have been some impressive results, like GPT4-o3 doing very well on Math Olympiad questions or the ARC-AGI-1-PUB benchmark. However, if you look a bit deeper it turns out these models do not really reason. Instead they still approximate, but in an indirect way. The story starts with a build-up on the various ways models can scale and the effects of scaling.

Generative AI ‘reasoning models’ don’t reason, even if it seems they do

‘Reasoning models’ such as GPT4-o3 have become a well known member of the Generative AI family. But look inside and while they add a certain depth, at the same time they add nothing at all. Not ‘reasoning’ anyway. Just another ‘level of indirection’ when approximating. Sometimes powerful. Always costly.

Keep readingSummarising text is one of the use cases for LLMs that is seen as pretty realistic, and I saw it so too. I experienced summarising by LLMs first hand recently (in a professional setting) and the experience taught me a few things about this use case that are worth sharing. Surprising conclusion: when LLMs are asked to summarise, the result looks like summarising, but it is something else.

When ChatGPT summarises, it actually does nothing of the kind.

One of the use cases I thought was reasonable to expect from ChatGPT and Friends (LLMs) was summarising. It turns out I was wrong. What ChatGPT isn’t summarising at all, it only looks like it. What it does is something else and that something else only becomes summarising in very specific circumstances.

Keep readingResearchers have investigated over and covert racism in LLMs. They come to some conclusions that should act as a warning. While overt (visible) racism has been going down, the hidden racism (with unknown, but potentially very harmful effects) is still there and has actually gotten worse. It is potentially a clear example of the limits of fine-tuning the models and there is a potential double whammy: as overt racism (or other -isms) shrinks, we humans might start to trust the models more on this respect, while within the models, hidden from view, but potentially harmful nonetheless, the covert racism may have grown. There are some caveats, but the signs are bad.

Ain’t No Lie — The unsolvable(?) prejudice problem in ChatGPT and friends

Thanks to Gary Marcus, I found out about this research paper. And boy, is this is both a clear illustration of a fundamental flaw at the heart of Generative AI, as well as uncovering a doubly problematic and potentially unsolvable problem: fine-tuning of LLMs may often only hide harmful behaviour, not remove it.

Keep readingA short clip of Ilya Sutskever has been published on YouTube in which he explains why he is convinced that the current LLM technology of ‘token prediction’ can lead to superhuman intelligence. I have created a cleaned up transcript of this (with a few comments) because the argument he gives is important to read.

What makes Ilya Sutskever believe that superhuman AI is a natural extension of Large Language Models?

I came across a 2 minute video where Ilya Sutskever — OpenAI’s chief scientist — explains why he thinks current ‘token-prediction’ large language models will be able to become superhuman intelligences. How? Just ask them to act like one.

Keep readingSam Altman reportedly has (early 2024) been making the rounds to get $7 trillion of financing for a massive increase in AI-chip production. His remarks seem to suggest (never very clear or transparent, our Sam) that he — like Ilya — believes that Generative AI will grow into Artificial General Intelligence, systems that are as intelligent as humans, or even more. But OpenAI’s own data suggests that even more sizing — we already were at the current sizes around 2019 — is a dead end.

Will Sam Altman’s $7 Trillion Plan Rescue AI?

Sam Altman wants $7 trillion for AI chip manufacturing. Some call it an audacious ‘moonshot’. Grady Booch has remarked that such scaling requirements show that your architecture is wrong. Can we already say something about how large we have to scale current approaches to get to computers as intelligent as humans — as Sam intends?…

Keep readingThe way we humans talk about ChatGPT and Friends and the way ChatGPT and Friends describe themselves are misleading us by something what Uncle Ludwig called Bewitchment by Language.

GPT and Friends bamboozle us big time

After watching my talk that explains GPT in a non-technical way, someone asked GPT to write critically about its own lack of understanding. The result is illustrative, and useful. “Seeing is believing”, true, but “believing is seeing” as well.

Keep readingAs we are talking about Artificial Intelligence, it turns out that talking about our own — human — intelligence is key. When are we convinced of something (i.e. “Intelligent Machines are Nigh!”)? How do our convictions influence our observations and reasoning (quite a lot, it turns out). How does our architecture compare to that of digital computers?

On the Psychology of Architecture and the Architecture of Psychology

[Sticky] About the role ‘convictions’ play in human intelligence, starting from the practical situations ‘advisors’ — such as IT advisors — find themselves in.

Advisors need (a) to know what they are talking about and (b) be able to convince others. For architects, the first part is called ‘architecture’ and the second part could…

Keep readingThis is the entire talk from 10 October 2023. There are overlaps between all entries here, but this one contains the two technical explanations with more context and more robustly.

Een Nederlands gesproken, meer uitgebreide, versie van 24 februari 2024, met ook wat nieuwe ontwikkelingen er in meegenomen. Wel langer…

Some earlier stories related to the rise of large generative models:

Before the advent of ChatGPT and friends (Generative AI, the very large neural networks — thanks to the transformer architecture) there was already to occasional fever about AI, such as about ‘cognitive computing’ (neural networks in general), written about here

[You do not have my permission to use any content on this site for training a Generative AI (or any comparable use), unless you can guarantee your system never misrepresents my content and provides a proper reference (URL) to the original in its output. If you want to use it in any other way, you need my explicit permission]