Microsoft researchers published a very informative paper on their pretty smart way to let GenAI do 'bad' things (i.e. 'jailbreaking'). They actually set two aspects of the fundamental operation of these models against each other.

Ain’t No Lie — The unsolvable(?) prejudice problem in ChatGPT and friends

Thanks to Gary Marcus, I found out about this research paper. And boy, this is both a clear illustration of a fundamental flaw at the heart of Generative AI and the uncovering of a doubly problematic and potentially unsolvable problem: fine-tuning of LLMs may often only hide harmful behaviour, not remove it.

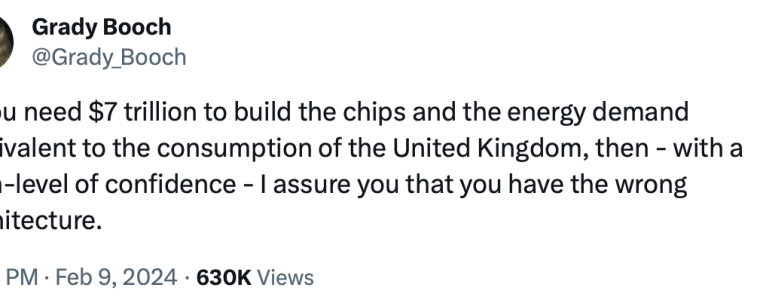

Will Sam Altman’s $7 Trillion Plan Rescue AI?

Sam Altman wants $7 trillion for AI chip manufacturing. Some call it an audacious 'moonshot'. Grady Booch has remarked that such scaling requirements show that your architecture is wrong. Can we already say something about how large we have to scale current approaches to get to computers as intelligent as humans — as Sam intends? Yes we can.

The Department of “Engineering The Hell Out Of AI”

ChatGPT has acquired the ability to recognise an arithmetic question and respond by creating Python code on the fly, executing it, and using the result to generate the response. Gemini contains an interesting trick Google plays to improve benchmark results. These (inspired) engineering tricks lead to an interesting conclusion about the state of LLMs.

Memorisation: the deep problem of Midjourney, ChatGPT, and friends

If we ask GPT to get us "that poem that compares the loved one to a summer's day" we want it to produce the actual Shakespeare Sonnet 18, not some confabulation. And it does. It has memorised this part of the training data. This is both sought-after and problematic and provides a fundamental limit for the reliability of these models.

What makes Ilya Sutskever believe that superhuman AI is a natural extension of Large Language Models?

I came across a two-minute video in which Ilya Sutskever, OpenAI's chief scientist, explains why he thinks current 'token-prediction' large language models will be able to become superhuman intelligences. How? Just ask them to act like one.

State of the Art Gemini, GPT and friends take a shot at learning

Google’s Gemini has arrived. Google has produced videos, a blog, a technical background paper, and more. According to Google: "Gemini surpasses state-of-the-art performance on a range of benchmarks including text and coding." But hidden in the grand words lies another generally overlooked aspect of Large Language Models which is important to understand. And when we use that aspect to try to trip up GPT, we see something peculiar. Shenanigans, shenanigans.

Artificial General Intelligence is Nigh! Rejoice! Be very afraid!

Should we be hopeful or scared about imminent machines that are as intelligent as humans, or more so? Surprisingly, this debate is even older than computers, and from the mathematician Ada Lovelace comes an interesting observation that is as valid now as it was when she made it in 1842.

GPT and Friends bamboozle us big time

After watching my talk that explains GPT in a non-technical way, someone asked GPT to write critically about its own lack of understanding. The result is illustrative, and useful. "Seeing is believing", true, but "believing is seeing" as well.

The hidden meaning of the errors of ChatGPT (and friends)

We should stop labelling the wrong results of ChatGPT and friends (the 'hallucinations') as 'errors'. Even Sam Altman, CEO of OpenAI, agrees: he has said they are more 'features' than 'bugs'. But why is that? And why should we not call them errors?